AI Server Demand Explodes, Nvidia GPU Reigns Supreme

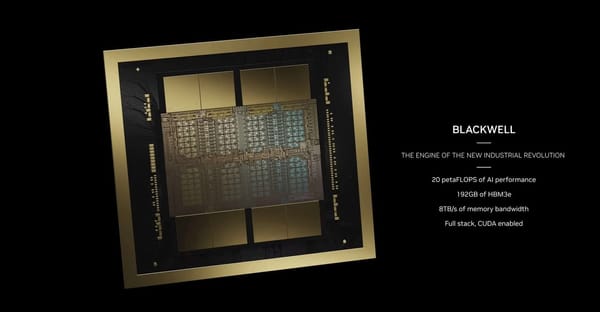

According to a report from Morgan Stanley cited by United Daily News, Nvidia and its partners will charge roughly $2 million to $3 million per AI server cabinet equipped with Nvidia's upcoming Blackwell GPUs. The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion.

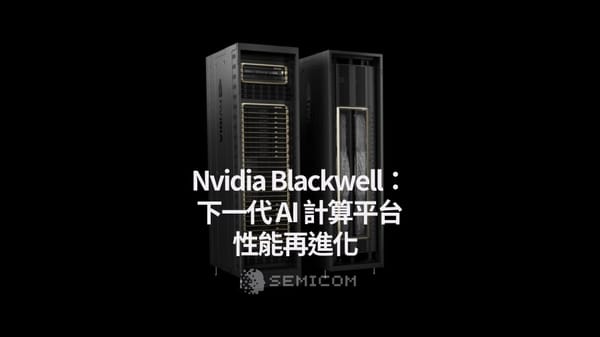

So far, Nvidia has introduced two 'reference' AI server cabinets based on its Blackwell architecture: the NVL36, equipped with 36 B200 GPUs, which is expected to cost from $2 million ($1.8 million, according to previous reports), and the NVL72, with 72 B200 GPUs, which is projected to start at $3 million.

NVL36 and NVL72 server cabinets (or PODs) will be available not only from Nvidia itself and its traditional partners, such as Foxconn (the world's largest supplier of AI servers), Quanta, and Wistron, but also from newcomers such as Asus. Assuming that Nvidia's partner TSMC can produce enough B100 and B200 GPUs on its 4nm-class process technology and package them using its chip-on-wafer-on-substrate (CoWoS) technology, availability from newcomers should ease the tight supply of Blackwell-based machines.

Based on the UDN report citing Morgan Stanley, Nvidia anticipates shipping between 60,000 and 70,000 B200 server cabinets next year, each priced between $2 million and $3 million. That translates to an estimated annual revenue of at least $210 billion from these machines (70,000 cabinets at $3 million each comes to $210 billion), which means that companies like AWS and Microsoft will spend even more. This brings us to the math of Sequoia Capital partner David Cahn, who believes that the AI industry has to earn around $600 billion to pay off machines and data centers.
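
The revenue arithmetic above can be checked with a quick back-of-the-envelope sketch. It uses only the shipment and price figures quoted in the article. The function name `cabinet_revenue_usd` and the `nvl72_share` parameter are hypothetical, and treating $2 million as the NVL36 price and $3 million as the NVL72 price is a simplifying assumption, since the report gives no product mix.

```python
# Back-of-the-envelope check of the cabinet revenue figures quoted in the article.
# All inputs are the article's reported numbers; the pricing mix is an assumption.

NVL36_PRICE_USD = 2_000_000  # reported starting price, NVL36
NVL72_PRICE_USD = 3_000_000  # reported starting price, NVL72


def cabinet_revenue_usd(cabinets: int, nvl72_share: float) -> float:
    """Revenue for `cabinets` units when a fraction `nvl72_share` are NVL72.

    `nvl72_share` is a hypothetical parameter; the report gives no product mix.
    """
    if not 0.0 <= nvl72_share <= 1.0:
        raise ValueError("nvl72_share must be between 0 and 1")
    average_price = (
        nvl72_share * NVL72_PRICE_USD + (1.0 - nvl72_share) * NVL36_PRICE_USD
    )
    return cabinets * average_price


low = cabinet_revenue_usd(60_000, nvl72_share=0.0)   # fewest units, all NVL36
high = cabinet_revenue_usd(70_000, nvl72_share=1.0)  # most units, all NVL72

print(f"Low end:  ${low / 1e9:.0f} billion")
print(f"High end: ${high / 1e9:.0f} billion")
```

Under these assumptions the two endpoints come to $120 billion and $210 billion, so the $210 billion figure matches the top of this naive range, with every cabinet sold at the higher price.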

Demand for AI servers is setting records and will not slow down any time soon, which will benefit both makers of AI servers and developers of AI GPUs. Despite the influx of competitors, Nvidia's GPUs are set to remain the de facto standard for training and many inference workloads, which benefits the company.

Based on talks with industry sources, the same Morgan Stanley report says that international giants such as Amazon Web Services, Dell, Google, Meta, and Microsoft will all adopt Nvidia's Blackwell GPUs for AI servers. As a result, demand is now projected to exceed expectations, prompting Nvidia to increase its orders with TSMC by approximately 25%, the report claims.
